PhD thesis defense to be held on May 16, 2025, at 12:00 (Multimedia Amphitheater, Central Library Ground Floor, Zografos campus)

Picture Credit: Ioannis Zorzos

Thesis title: TECHNIQUES FOR IMPROVING THE EXPLANATORY POWER OF ARTIFICIAL INTELLIGENCE SYSTEMS ON ELECTROENCEPHALOGRAM DATA

Abstract: Electroencephalography (EEG)-based brain-computer interface (BCI) systems have garnered increasing interest for their capacity to decode brain activity into actionable signals for assistive technologies and clinical diagnostics. However, the performance and interpretability of conventional EEG analysis pipelines often remain limited due to the inherent complexity of neural signals, the vulnerability of EEG recordings to noise, and the black-box nature of advanced machine learning approaches. In this dissertation, we introduce a comprehensive framework that addresses these challenges by combining source localization and deep learning architectures with cutting-edge explainability techniques.

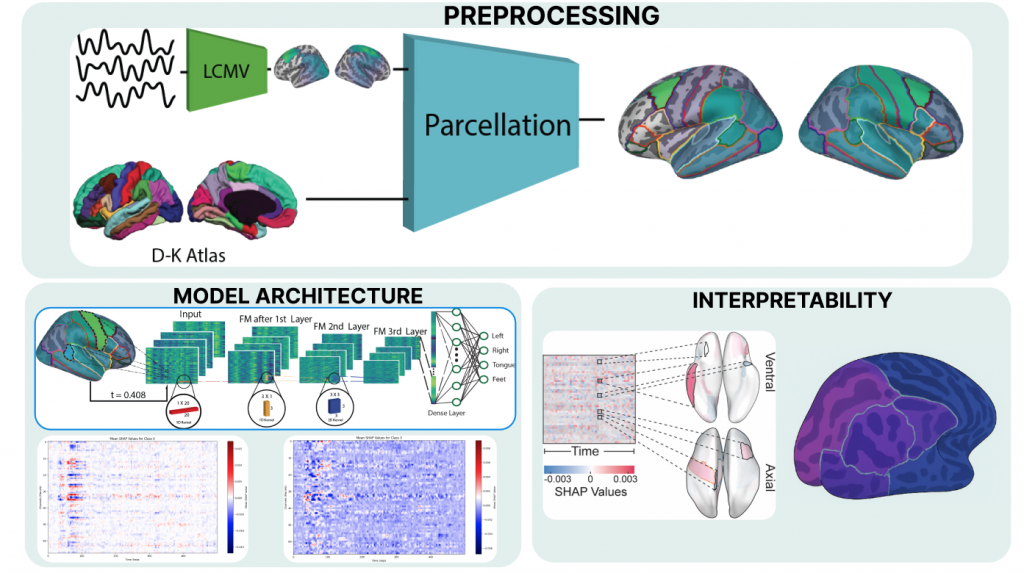

Central to our methodology is the integration of beamformer-based source localization, which projects EEG signals from the sensor space to the source space, thereby enhancing the anatomical relevance of the resulting features. Alongside this spatial mapping, we employ the Continuous Wavelet Transform (CWT) to extract rich time-frequency representations. These combined spatiotemporal features serve as input to specialized convolutional neural networks (CNNs) and fully connected neural networks designed to capture the highly dynamic and distributed characteristics of brain activity. This multi-level preprocessing strategy not only improves classification performance but also yields a more interpretable feature space tied to specific cortical regions and oscillatory patterns.
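The two preprocessing steps above can be illustrated with a minimal NumPy sketch. It assumes a scalar (fixed-orientation) LCMV beamformer, one common beamformer variant, with a known lead-field vector, and a complex Morlet wavelet as the CWT mother wavelet. The thesis's actual head model, beamformer variant, wavelet, and parameters are not given here; the function names, the regularization value, and the simulated data are hypothetical.

```python
import numpy as np

def lcmv_weights(leadfield, cov, reg=0.05):
    """Scalar LCMV weights for one fixed-orientation source (hypothetical helper).

    Minimizes output power w^T C w subject to the unit-gain constraint w^T l = 1.
    `reg` adds diagonal loading as a fraction of mean channel power (assumed value).
    """
    n = cov.shape[0]
    cov_reg = cov + reg * np.trace(cov) / n * np.eye(n)
    cinv_l = np.linalg.solve(cov_reg, leadfield)
    return cinv_l / (leadfield @ cinv_l)

def morlet_power(signal, fs, freqs, n_cycles=7.0):
    """Time-frequency power of a 1-D signal via complex Morlet wavelet convolution."""
    power = np.empty((len(freqs), len(signal)))
    for i, f in enumerate(freqs):
        sigma_t = n_cycles / (2 * np.pi * f)  # temporal width of the Gaussian envelope
        t = np.arange(-5 * sigma_t, 5 * sigma_t, 1 / fs)
        if len(t) > len(signal):
            raise ValueError("signal is shorter than the wavelet at %.1f Hz" % f)
        wavelet = np.exp(2j * np.pi * f * t) * np.exp(-t**2 / (2 * sigma_t**2))
        wavelet /= np.sqrt(np.sum(np.abs(wavelet) ** 2))  # unit-energy normalization
        power[i] = np.abs(np.convolve(signal, wavelet, mode="same")) ** 2
    return power

# Simulated example: one 10 Hz source projected onto 8 channels plus sensor noise.
rng = np.random.default_rng(0)
fs, n_ch = 250, 8
times = np.arange(0, 4, 1 / fs)
source = np.sin(2 * np.pi * 10 * times)
leadfield = rng.normal(size=n_ch)  # hypothetical forward-model gains
eeg = np.outer(leadfield, source) + 0.5 * rng.normal(size=(n_ch, len(times)))

w = lcmv_weights(leadfield, np.cov(eeg))
source_estimate = w @ eeg                       # sensor space -> source space
freqs = np.arange(4, 31)                        # 4-30 Hz, theta to beta
tfr = morlet_power(source_estimate, fs, freqs)  # shape (n_freqs, n_times)
peak_freq = freqs[np.argmax(tfr.mean(axis=1))]
```

The unit-gain constraint keeps the reconstructed source amplitude on the scale of the simulated source, so the time-averaged power map should peak at the simulated 10 Hz rhythm; a real pipeline would compute the lead field from a head model and repeat this for every source location.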

To address the black-box nature of deep neural networks, two influential explainability methods—SHapley Additive exPlanations (SHAP) and Integrated Gradients—are applied. SHAP offers granular, feature-level attributions, thereby illuminating the specific frequency bands and time windows most salient for the model’s predictions. By contrast, Integrated Gradients connects model decisions to anatomically localized brain sources, facilitating a neuroscientifically grounded interpretation. This dual explainability paradigm reveals complementary insights: whereas SHAP delineates critical oscillatory features, Integrated Gradients identifies the cortical regions that govern the classification outcomes.
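The two attribution ideas can be contrasted on a toy model. The sketch below assumes a hypothetical four-feature logistic classifier and an all-zero baseline. It computes exact Shapley values by enumerating feature subsets (the quantity SHAP approximates) and Integrated Gradients by averaging gradients along the straight path from the baseline to the input. It is not the thesis's pipeline; for deep networks, libraries such as shap and Captum provide approximations of these quantities.

```python
import numpy as np
from itertools import combinations
from math import factorial

# Toy differentiable "classifier": logistic model on 4 hypothetical features
# (e.g. band power in two frequency bands at two sources).
weights = np.array([1.5, -2.0, 0.7, 0.3])
bias = -0.2

def model(x):
    return 1.0 / (1.0 + np.exp(-(x @ weights + bias)))

def model_grad(x):
    p = model(x)
    return p * (1.0 - p) * weights  # gradient of sigmoid(w.x + b) with respect to x

def exact_shapley(f, x, baseline):
    """Exact Shapley values; features outside a coalition take their baseline values."""
    n = len(x)
    phi = np.zeros(n)

    def value(subset):
        z = baseline.copy()
        idx = np.array(subset, dtype=int)
        z[idx] = x[idx]
        return f(z)

    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(n):
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                phi[i] += weight * (value(subset + (i,)) - value(subset))
    return phi

def integrated_gradients(grad_f, x, baseline, steps=200):
    """Integrated Gradients with a midpoint Riemann sum along the straight-line path."""
    alphas = (np.arange(steps) + 0.5) / steps
    path = baseline + alphas[:, None] * (x - baseline)
    grads = np.array([grad_f(point) for point in path])
    return (x - baseline) * grads.mean(axis=0)

x = np.array([0.8, 0.1, 0.5, 0.9])
baseline = np.zeros_like(x)
phi = exact_shapley(model, x, baseline)
ig = integrated_gradients(model_grad, x, baseline)
delta = model(x) - model(baseline)
```

Both attributions sum to the change in model output between the baseline and the input (efficiency for Shapley values, completeness for Integrated Gradients). Exact enumeration grows exponentially with the number of features, which is why SHAP relies on sampling or model-specific approximations for high-dimensional EEG feature sets.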

Empirical evaluations use the BCI Competition IV 2a and PhysioNet BCI datasets. Our models consistently outperform traditional baselines, ranging from support vector machines (SVM) and linear discriminant analysis (LDA) to sensor-level neural network architectures, across metrics such as accuracy, precision, and F1-score. Furthermore, statistical significance testing confirms the superiority of source-localized time-frequency models over simpler pipelines. Despite inherent challenges, including the relatively small subject pool, data artifacts, and the computational cost of source localization and interpretability computations, the study shows how combining advanced signal processing with targeted machine learning can enhance, or at least retain, classification performance while providing valuable physiological insights.
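To illustrate the reported metrics, the following sketch computes accuracy and macro-averaged precision and F1-score from true and predicted labels for a four-class task (BCI Competition IV 2a contains four motor-imagery classes). Macro averaging is an assumption, since the averaging scheme is not stated here; the helper name and the labels are made up.

```python
import numpy as np

def classification_metrics(y_true, y_pred, n_classes):
    """Accuracy plus macro-averaged precision and F1 from integer class labels."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)  # rows: true class, columns: predicted class
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)  # trials assigned to each class
    actual = cm.sum(axis=1)     # trials truly belonging to each class
    zeros = np.zeros(n_classes)
    # Classes with no predictions or no trials get a score of 0 instead of NaN.
    precision = np.divide(tp, predicted, out=zeros.copy(), where=predicted > 0)
    recall = np.divide(tp, actual, out=zeros.copy(), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=zeros.copy(), where=denom > 0)
    return {"accuracy": tp.sum() / cm.sum(),
            "precision": precision.mean(),
            "f1": f1.mean()}

# Made-up predictions for 8 trials of a 4-class task.
metrics = classification_metrics([0, 0, 1, 1, 2, 2, 3, 3],
                                 [0, 1, 1, 1, 2, 0, 3, 3], n_classes=4)
# accuracy 0.75, macro precision ~0.792, macro F1 ~0.742
```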

Looking ahead, future work envisions extending the approach to more diverse populations, larger EEG datasets, and clinical contexts such as epilepsy, neurodegenerative conditions, and personalized rehabilitation protocols. Efforts will also focus on refining artifact rejection procedures, incorporating emerging neural architectures like graph neural networks, and further improving explainability tools through hybrid or counterfactual analyses. By bridging methodological rigor and neuroscientific relevance, this research lays a foundation for the development of explainable, high-performance EEG-based BCIs that are robust, interpretable, and clinically actionable.

Supervisor: Professor George Matsopoulos

PhD Student: Ioannis Zorzos