PhD Thesis Final Defense to be held on July 11, 2018 at 11:00

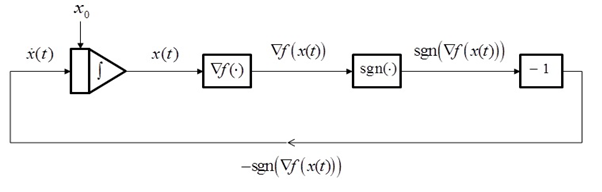

Thesis Title: The Sign recurrent neural network for unconstrained minimization.

The examination is open to anyone who wishes to attend (Room 2.2.29, Old ECE Building).

Abstract

In this Thesis, the sign dynamical system for unconstrained minimization of a continuously differentiable function f is examined. This dynamical system has a discontinuous right-hand side and is interpreted here as a recurrent neural network, which we name the Sign Neural Network. Using Filippov’s approach, we first prove asymptotic convergence of the Sign Neural Network. Finite-time convergence of the solutions is then established, and an improved upper bound on the convergence time is given.

A first contribution of this Thesis is a detailed calculation of Filippov’s set-valued map for the Sign Neural Network in the general case, i.e. without any restrictive assumptions on the function f to be minimized. Convergence of the solutions to stationary points of f then follows from standard results, namely a generalized version of LaSalle’s invariance principle. Next, in order to prove finite-time convergence of solutions, the applicability of standard results is extended so that they cover the Sign Neural Network. Finally, in establishing finite-time convergence, a novel proof technique is introduced which (i) allows milder assumptions on the function f, and (ii) yields an improved upper bound on the convergence time.

Numerical experiments confirm both the effectiveness and finite-time convergence of the Sign Neural Network.
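As a rough illustration of the finite-time behaviour described above, the following minimal sketch integrates a sign dynamical system with forward Euler. It is not taken from the Thesis: the form dx/dt = -sign(∇f(x)), the test function f(x) = ½‖x‖², the step size, and the names `sign_flow_euler` and `grad_quadratic` are all assumptions made for this example. For this particular f, each coordinate moves toward zero at unit speed, so the continuous-time solution reaches the minimizer at time max|x_i(0)|; the discretized iterate can only get within one step size of it and then chatters around zero.

```python
import numpy as np


def sign_flow_euler(grad, x0, step=1e-3, t_end=3.0):
    """Forward-Euler discretization of dx/dt = -sign(grad f(x)).

    Assumed form of the sign dynamical system; illustrative only.
    Returns the full trajectory as an array of shape (n_steps + 1, n).
    """
    x = np.asarray(x0, dtype=float).copy()
    n_steps = int(round(t_end / step))
    traj = np.empty((n_steps + 1, x.size))
    traj[0] = x
    for k in range(n_steps):
        # Each coordinate moves by exactly `step` against the gradient sign.
        x = x - step * np.sign(grad(x))
        traj[k + 1] = x
    return traj


def grad_quadratic(x):
    """Gradient of the hypothetical test function f(x) = 0.5 * ||x||^2."""
    return x


x0 = np.array([2.0, -1.5, 0.5])
step = 1e-3
traj = sign_flow_euler(grad_quadratic, x0, step=step, t_end=3.0)

# Distance to the minimizer (the origin) at the end of the run.
final_error = np.max(np.abs(traj[-1]))

# First time at which every coordinate is within one step of zero.
within = np.all(np.abs(traj) <= step + 1e-9, axis=1)
hit_time = step * int(np.argmax(within))

print("final max |x_i|:", final_error)
print("approximate hitting time:", hit_time)
print("max |x_i(0)|:", np.max(np.abs(x0)))
```

In this toy case the observed hitting time agrees with max|x_i(0)| up to the step size. The chattering near the minimizer is an artifact of the explicit discretization of a discontinuous right-hand side, not a property of the Filippov solutions analysed in the Thesis.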

Supervisor: Maratos Nicholas, Professor

PhD student: Moraitis M.